Alibaba Cloud has recently introduced two open-source large vision language models: the Qwen-VL and the Qwen-VL-Chat. These models are designed to understand both images and text, making them versatile tools for various tasks in both English and Chinese.

Qwen-VL understands images and texts

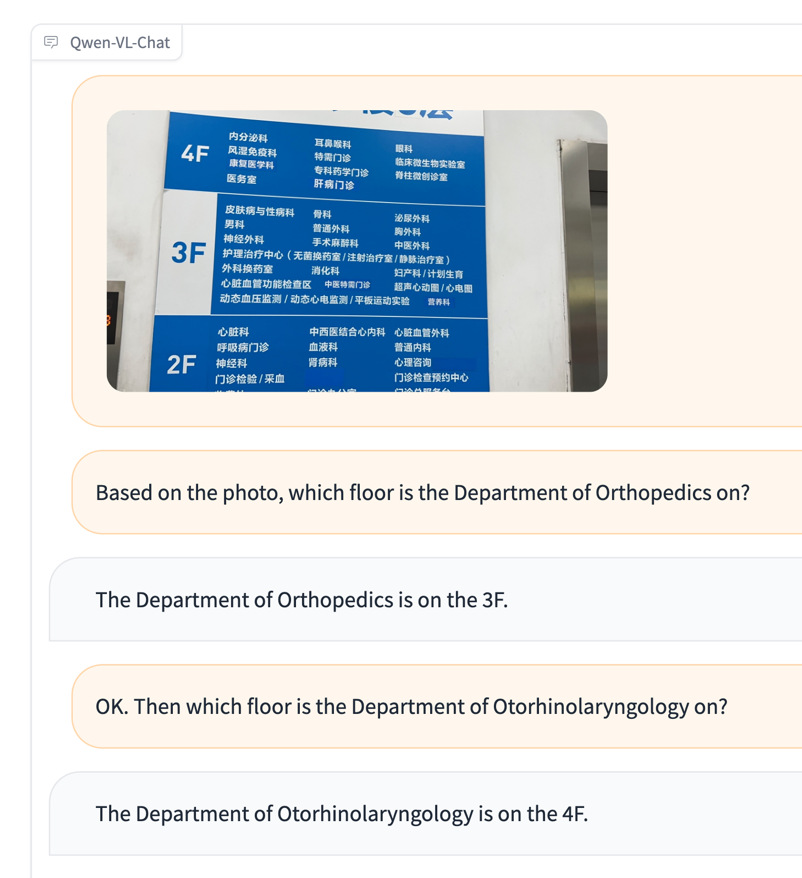

The Qwen-VL is an extension of Alibaba Cloud’s own AI Chatbot, Tongyi Qianwen and is capable of comprehending image inputs and text prompts. It works like a smart assistant that can look at pictures and answer open-ended questions about them.

Alibaba Cloud stated Qwen-VL is capable of handling higher-resolution image inputs compared to other open-source models. It can also engage in various visual language tasks, including captioning, question answering, and object detection in pictures.

Qwen-VL-Chat can perform creative tasks

On the other hand, Qwen-VL-Chat takes things a step further by enabling complex interactions. It can perform creative tasks like writing poetry and stories based on input images, summarising the content of multiple pictures, and solving math problems displayed in images.

According to benchmark tests conducted by Alibaba Cloud, Qwen-VL-Chat excels in text-image dialogue and able to answer questions in both Chinese and English

Both open-source models are accessible globally

This is exciting news to the world because Alibaba Cloud is sharing these models with researchers and academic institutions worldwide. Alibaba Cloud made the code, weights, and documentation available for free.

This means more people can use these models for various tasks. Companies with over 100 million monthly active users can also request a licence for commercial use.

These models have the potential to change how we interact with images online. For example, they could assist visually impaired individuals with online shopping by describing products in images.

For more detailed information, you can read the full story on Alizila and find additional details about Qwen-VL and Qwen-VL-Chat on ModelScope, HuggingFace, and GitHub pages. The research paper detailing the model is also available here.