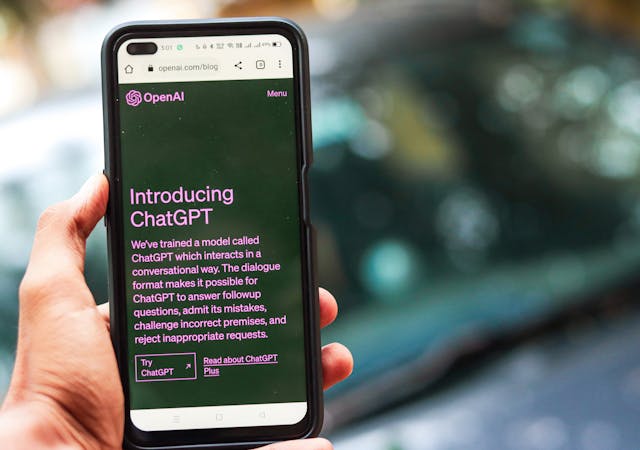

OpenAI announces o3-mini, a new reasoning model for ChatGPT that lets it handle more complex reasoning faster and more efficiently.
