With AI booming in every sector, Dell Technologies weighs in on the strategic advantages of Sovereign AI and how Malaysia can leverage it.

OpenAI Launches GPT-5, Claimed to Be a Leap Beyond Previous Models

OpenAI introduces GPT-5, its most advanced model yet, bringing what the company calls a significant leap in reasoning, including multimodal reasoning.

OpenAI Returns to Open-Source with New GPT-OSS Models

OpenAI releases two new open-source GPT models, gpt-oss-20b and gpt-oss-120b, signaling a major return to open development of its GPT models.

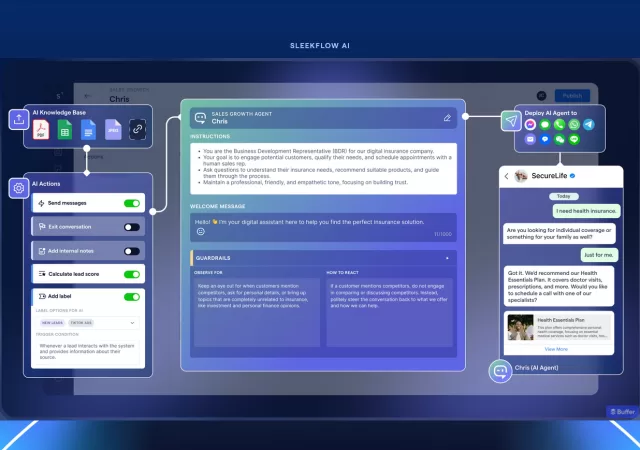

SleekFlow’s New AgentFlow AI Tool Leverages LLMs to Drive Conversions

SleekFlow announces AgentFlow, a new agentic AI tool designed to help businesses turn conversations into conversions.

Zepp Flow Makes Its Debut in Zepp OS 3.5 for the Amazfit Balance

Zepp Health announces Zepp OS 3.5, a major update to Zepp OS that brings AI integration to the Amazfit Balance in the form of Zepp Flow.

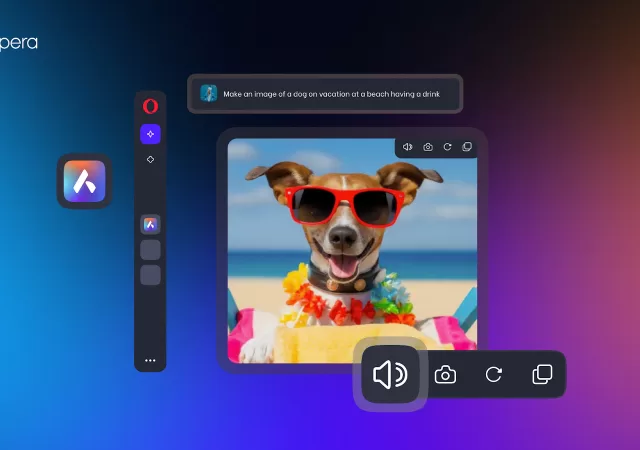

Opera Browser Gets AI Infusion with Google Cloud & Gemini

Opera announces a partnership with Google Cloud to bring AI features, powered by Google’s Gemini generative AI model, to its popular web browser.

Sony Music Issues Stern Warning Against Use of Its Artists’ Content for Training AI

Sony Music Entertainment warns more than 700 tech and streaming companies against using its artists’ content to train AI models.

OpenAI Unveils GPT-4o: A Sassier, More Robust Version of GPT-4

OpenAI announces GPT-4 omni (GPT-4o), a more advanced version of GPT-4 capable of more than just text generation.