Mozilla integrates Firefox Relay into its Firefox browser as a native feature, allowing users to take control of their privacy from the get-go.

533 Million Facebook Users’ Data Resurfaces Online from 106 Countries

Facebook seems to be having a rough run recently. The company initially faced a humongous backlash over its implementation of data-sharing policies between the popular messaging app WhatsApp and its parent company. Now, it looks like old wounds are reopening…

Google Comes Under Fire For Chrome’s Incognito Mode

Google comes under fire as users discover that Chrome’s Incognito mode isn’t really incognito.

WhatsApp’s New Policies Are Coming into Effect, Here’s What Will Happen If You Haven’t Accepted

WhatsApp has detailed what will happen to users who do not accept its new privacy policy, which requires them to share data with Facebook.

[Podcast] Tech & Tonic Special: Sitdown with Alex Tan of HID Global about Biometrics and Security

In this special, we sat down with Mr. Alex Tan, the Director of Sales for the ASEAN Region for Physical Access Control Systems from HID Global. We had a conversation about some of the emerging trends in the industry on…

Is Privacy Our Sole Concern With Contact Tracing Technology?

This week the Guardian reported an alleged ‘standoff’ between the NHSX (the digital innovation arm of the NHS) and tech giants Google and Apple regarding the deployment of contact tracing technology aimed at curbing the spread of the Covid-19 virus.…

Tech & Tonic Episode 2 feat. Vernon Chan – Pre-Order, Cash, or Installment? Oh, Use Protection Everyone!

In episode two of Tech & Tonic, we have a featured guest who is none other than Vernon Chan! He joins us today to talk about a few key aspects of how the local tech market is, to…

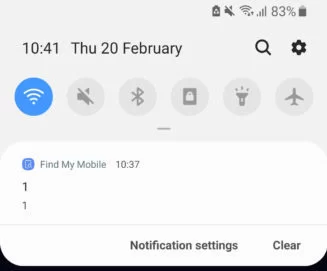

Samsung Find My Mobile Notification was a Data Breach

Update (26 February 2020): Samsung has reached out to SamMobile to clarify that the data breach wasn’t related to the Find My Mobile notification. Instead, the data breach was an isolated incident which occurred on the UK Samsung website. According…