AI 2.0 is driving a significant change in how business messaging is targeted and delivered. Meta shares some insight into how it is paving the way for this on Facebook and Instagram.

Vimigo Announces Development of New AI-Integrated HR Mobile App

Vimigo has secured RM2.25 million in funding and revealed its plans to develop an AI-powered HR mobile app and expand into the Asian market.

Alibaba Cloud Announces New AI Image Generation Model, Tongyi Wanxiang

Alibaba Cloud has introduced its latest AI image generation model, Tongyi Wanxiang (‘Wanxiang’ means ‘tens of thousands of images’). This model is designed to help businesses improve their creativity by generating high-quality images across different art styles.

Copilot-ing the Future of Work with Generative AI

Microsoft’s Work Trend Index 2023 shows a future that will see AI and humans working hand-in-hand like never before.

Accelerating AI-driven outcomes with Powerful Super Computing Solutions

Dell Technologies’ Mak Chin Wah discusses the importance of AI in advancing human progress and how modern infrastructure must match unique requirements to optimise outcomes.

Adobe Firefly, the Next-Generation AI Made for Creative Use

Adobe has announced its latest AI project, Firefly, a generative AI tool for various platforms as part of the Adobe Sensei ecosystem.

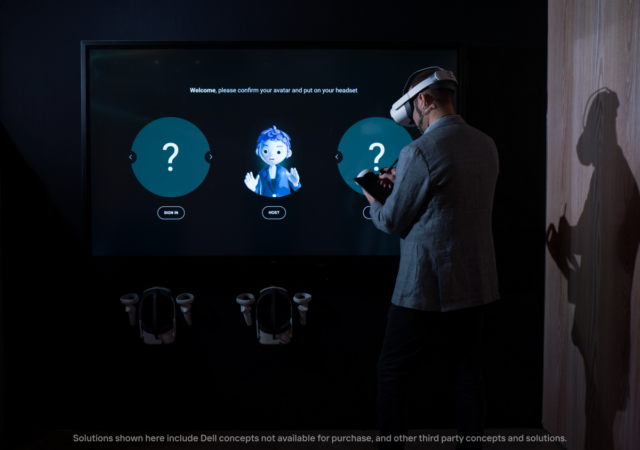

Concept Nyx and Explorations for the Future of Connection

Concept Nyx has been one of Dell’s most interesting developments in recent times, but it is only the beginning.

Edge Computing Benefits and Use Cases

Red Hat weighs in on the benefits of deploying and utilising edge computing in operations with actual use cases.

Xiaomi ROIDMI EVE Plus Robot Vacuum Review: Keeping up with the Dust Bunnies in a Smart Way

The ROIDMI EVE Plus is a robot vacuum offering in Xiaomi’s stable of IoT devices. We’re giving it the techENT Review to see if it’s worth the hype.