Schneider Electric and NVIDIA join forces to push the boundaries of innovation in data center infrastructure. Explore the future of AI and digital twin technologies.

OPPO Shows Off Air Glass 3 Concept at MWC 2024

OPPO showed off the Air Glass 3 concept device at MWC 2024, bringing a host of improvements and AI-powered features from AndesGPT.

Lenovo’s ThinkPad & ThinkBook Laptops Get Infused with AI Technology

Lenovo announces refreshes to its ThinkPad T series, the ThinkPad X12 Detachable and the ThinkBook 14, powered by the Intel Core Ultra, at MWC 2024.

Navigating the Transformation Paths in the Architecture, Engineering, and Construction (AEC) Industry

Intuitive design software and earth-friendly materials are part of the AEC industry's evolution. Keeping up with the times is key in architecture.

Back to Normal Comes with Recruitment Woes & An Increasing Role for AI in SMEs

Post-COVID, SMEs are finding it hard to compete with MNCs for talent. However, they can compete with the right technology and mindset.

Samsung Marries AI with Robot Vacuums in the Bespoke Jet Bot Combo

Samsung teases its lineup of AI-enabled vacuums with the new Bespoke Jet Bot Combo, which uses AI to vacuum and mop intelligently for better optimisation.

How Technology Changes Company Thinking And Company Performance

This article is contributed by Varinderjit Singh, General Manager, Lenovo Malaysia. While I think most of us would expect large organizations to include forward-thinking technology in their overall business strategies, we’re starting to see this with SMBs as well, including…

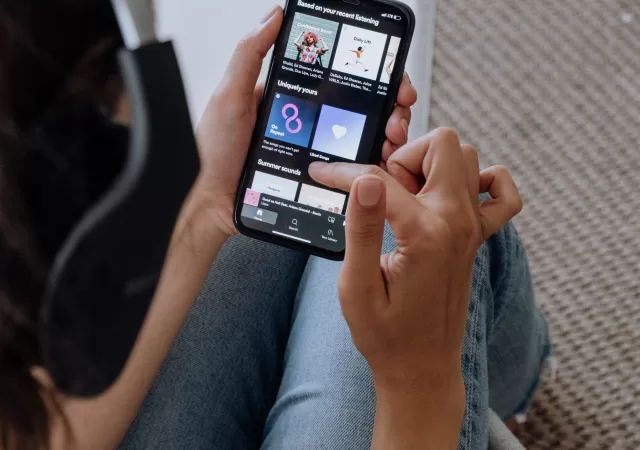

Listeners May Soon Get AI-generated Playlists On Spotify

Before you get all psyched and excited, step on the brakes first. The idea that Spotify users can easily generate a specially curated playlist according to a text prompt, making it much easier and simpler to find music and have…

Adopting New (Virtual and Augmented) Realities for Manufacturing

Augmented and virtual reality are changing the game for the manufacturing industry. AR/VR enables greater efficiency, enhanced employee training and cost cuts. Contributor Varinderjit Singh from Lenovo Malaysia explains the use of AR/VR in manufacturing.