Unlocking new levels of productivity is not just about having the right team; it's about equipping them with the right technologies to succeed.

How To Balance Business Innovation and Operational Excellence

Mr Varinderjit Singh, General Manager at Lenovo Malaysia, weighs in on the expanded role of CIOs and how to balance business innovation and operational excellence.

Accelerating AI-driven Outcomes with Powerful Supercomputing Solutions

Dell Technologies’ Mak Chin Wah discusses the importance of AI in advancing human progress and how modern infrastructure must match unique requirements to optimise outcomes.

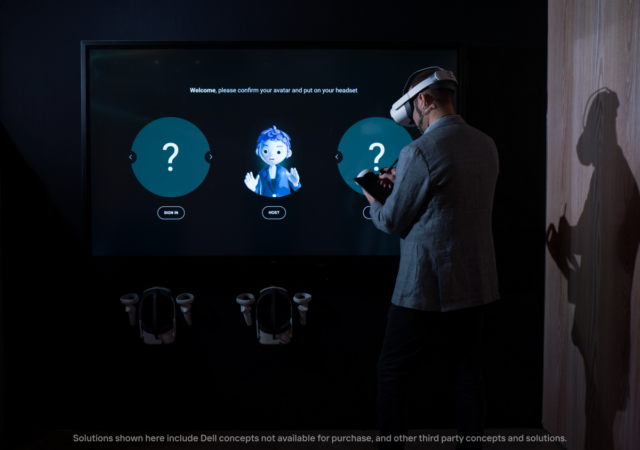

Concept Nyx and Explorations for the Future of Connection

Concept Nyx has been one of Dell's most interesting developments in recent times, but it is only the beginning.

Advancing Gender Equality with Online Learning: 5 Ways it’s Making a Difference

Online learning has become a key driver in levelling the playing field for women who continue to strive for excellence in work and life.

Cybercriminals are Ready to Crash Your Holiday Party

While the holiday season is a time for celebration, it is also notorious for cybersecurity incidents caused by gaps in security and automation.

5G Edge & Security Deployment Evolution, Trends & Insights

With 5G taking center stage in 2023, Red Hat looks at 5G edge deployments and some of the trends and insights driving adoption.

Service Providers: The Digital Link Between Industries, Society & Enterprise IT

Partners and service providers play an increasing role in turning digital transformation goals into actionable plans. Red Hat weighs in on their role across industries.

Continuing the Pace of Government Innovation in a Post-Pandemic World

Government innovation is key to a country's progress, and it cannot rely on world-changing events alone to spur it. Here are some ways it can continue even now.

Edge Automation: Seven Industry Use Cases & Examples

With edge computing becoming more mainstream, numerous use cases and challenges have emerged. Red Hat shares some of the most pertinent.