- Doubled the sampling speed compared to the company’s ‘minDALL-E’ model

- Achieved enhanced quality and faster sampling speed by representing high-resolution images as low-resolution 3D tensors of discrete codes

- Technology to be presented at CVPR 2022, a global computer vision conference

SEOUL, South Korea, April 20, 2022 /PRNewswire/ -- Kakao Brain has announced that it published its advanced text-to-image generator, Residual-Quantized (RQ) Transformer, on GitHub[1] in late March. RQ-Transformer, a text-to-image AI model with 3.9 billion parameters trained on 30 million text-image pairs, significantly improves the quality of generated images while reducing computational costs, and Kakao Brain says its sampling speed exceeds that of every other text-to-image generator available worldwide.

RQ-Transformer addresses the high computational costs and slow image generation of existing models. At its core is the residual quantization technique: instead of simply increasing the codebook size, it uses a fixed-size codebook to recursively quantize the feature map in a coarse-to-fine manner. This allows RQ-Transformer to learn more information in a shorter period of time.
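The residual quantization idea described above can be sketched in plain Python. This is a toy illustration under our own assumptions, not Kakao Brain's implementation: the codebook is random rather than learned, it includes a zero vector so that an extra quantization step can never increase the error, and the 8 x 8 feature map with depth 4 and all function names are hypothetical. Each position of the feature map is quantized several times against the same fixed-size codebook, each step encoding what the previous steps missed, which yields an H x W x D stack of codes (a low-resolution 3D tensor).

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_code(vec: Sequence[float], codebook: Sequence[Vector]) -> int:
    """Index of the codebook entry closest to `vec`."""
    return min(range(len(codebook)), key=lambda k: squared_distance(vec, codebook[k]))


def residual_quantize(vec: Sequence[float], codebook: Sequence[Vector],
                      depth: int) -> Tuple[List[int], Vector]:
    """Quantize one feature vector into `depth` codes from ONE shared codebook.

    Step 1 picks the code nearest the vector itself (coarse); each later step
    picks the code nearest the residual left over so far (finer detail).
    Returns the code indices and the summed reconstruction.
    """
    residual = list(vec)
    recon = [0.0] * len(vec)
    codes: List[int] = []
    for _ in range(depth):
        k = nearest_code(residual, codebook)
        codes.append(k)
        recon = [r + c for r, c in zip(recon, codebook[k])]
        residual = [r - c for r, c in zip(residual, codebook[k])]
    return codes, recon


def quantize_feature_map(feature_map: Sequence[Sequence[Vector]],
                         codebook: Sequence[Vector],
                         depth: int) -> List[List[List[int]]]:
    """Turn an H x W grid of feature vectors into an H x W x depth code tensor."""
    return [[residual_quantize(v, codebook, depth)[0] for v in row] for row in feature_map]


def make_toy_codebook(dim: int, per_scale: int, scales: Sequence[float],
                      seed: int = 0) -> List[Vector]:
    """Hypothetical random codebook; a real model would learn its codebook."""
    rng = random.Random(seed)
    codebook: List[Vector] = [[0.0] * dim]  # zero vector: a step may "add nothing"
    for s in scales:
        for _ in range(per_scale):
            codebook.append([s * rng.gauss(0.0, 1.0) for _ in range(dim)])
    return codebook


if __name__ == "__main__":
    codebook = make_toy_codebook(dim=4, per_scale=16, scales=(1.0, 0.5, 0.25, 0.125))
    rng = random.Random(1)
    # Hypothetical 8 x 8 feature map of 4-dimensional vectors.
    feature_map = [[[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
                   for _ in range(8)]
    codes = quantize_feature_map(feature_map, codebook, depth=4)
    print(len(codes), len(codes[0]), len(codes[0][0]))  # 8 8 4
    # Error at one position does not increase with depth (the zero code guarantees it).
    for d in range(1, 5):
        _, recon = residual_quantize(feature_map[0][0], codebook, d)
        print(d, round(squared_distance(feature_map[0][0], recon), 4))
```

Because the same codebook is reused at every depth, a position can take up to K^D distinct code combinations (here 65^4) without the codebook itself growing, which is the trade-off of recursive quantization over simply enlarging the codebook.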

With 3.9 billion parameters, the most of any text-to-image model in Korea, and the fastest sampling speed among Kakao Brain’s text-to-image AI models, RQ-Transformer samples twice as fast as minDALL-E, the 1.4-billion-parameter open-source text-to-image model also created by Kakao Brain.

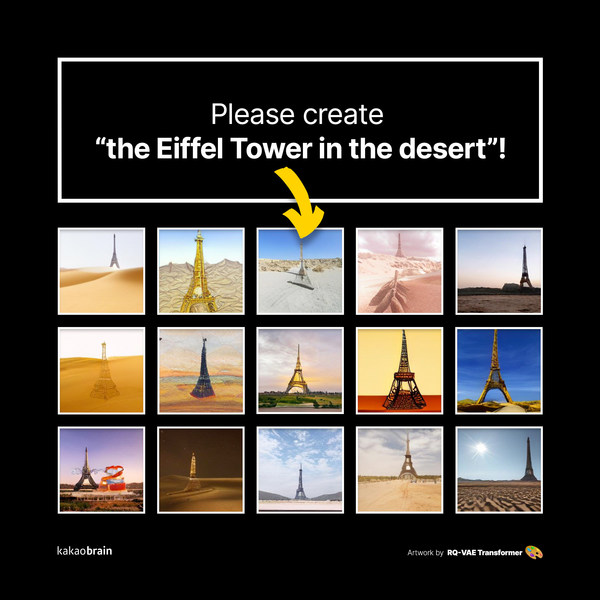

RQ-Transformer can understand text combinations it sees for the first time and create a corresponding image. Sample images generated on the text condition, ‘the Eiffel Tower in the desert,’ are shown below:

RQ-Transformer is only the beginning for Kakao Brain: it establishes the foundational technology for rapid image generation while maintaining cutting-edge performance. Building on this foundation, Kakao Brain plans to strengthen the model, improve the quality of computer-generated images, train on more data more cost-effectively, and develop technologies that go beyond generating images from given inputs to help people visualize the ideas in their heads on screen.

Recognized for its approach, the text-to-image technology was selected for presentation at CVPR 2022,[2] an annual global computer vision conference to be held in June this year. To uphold a high standard in its technologies, Kakao Brain’s Generative Model (GM) Team, which is in charge of the research and development (R&D) of image generation models, will continue to fine-tune the model in pursuit of more sophisticated images and faster sampling speeds.

“The computer generating images based on human commands signifies the tech’s ability to distinguish and understand the intention behind the demand,” said Kim Il-doo, CEO of Kakao Brain. “We’re incredibly excited to see where this research leads us, and we believe that this revolutionary AI model marks the beginning of the journey to a future where humans and computers can communicate freely.”

More information on RQ-Transformer is available on GitHub at https://github.com/kakaobrain/rq-vae-transformer.

About Kakao Brain

Kakao Brain is a world-leading AI company boasting unparalleled AI technologies and research & development networks. The company was established by Kakao in 2017 to solve some of the globe’s biggest ‘unthinkable questions’ with solutions enabled by its lifestyle-transforming AI technologies. Constantly driving innovation in the world of technology, Kakao Brain has developed numerous groundbreaking AI services and models designed to enhance quality of life for thousands of people, including minDALL-E, KoGPT, CLIP/ALIGN, and RQ-Transformer. As a global pioneer of AI, Kakao Brain has the responsibility of fostering a vibrant tech community and robust R&D ecosystem as it carries out its mission to form new tech markets with endless potential. For more information, visit https://KakaoBrain.com/.


[1] GitHub provides internet hosting for software development and is mostly used to host open-source projects. As of November 2021, GitHub is the largest source code host, with over 73 million developers and 200 million repositories.


[2] CVPR (Conference on Computer Vision and Pattern Recognition), first held in 1983 and co-sponsored by the Institute of Electrical and Electronics Engineers (IEEE) and the Computer Vision Foundation (CVF), is regarded as one of the most prestigious conferences in computer vision, along with the European Conference on Computer Vision (ECCV) and the International Conference on Computer Vision (ICCV).

View original content to download multimedia: https://www.prnewswire.com/news-releases/kakao-brain-unveils-efficient-text-to-image-generator-rq-transformer-on-github-301527786.html