Google has been working on a better, more unified experience for its bread and butter: search. As it moves forward, the tech giant wants search to be more contextually relevant, and it is turning to MUM, the Multitask Unified Model, to get there.

The new Multitask Unified Model (MUM) allows Google’s search algorithm to understand multiple forms of input. It can draw context from text, speech, images and even video. This, in turn, allows the search engine to return more contextually relevant results. It will also let the search engine understand more natural language and make sense of more complex searches. When Google first announced MUM, it said the model was trained across 75 languages and was 1,000 times more powerful than BERT, the model already used in Search.

Contextual Search is the New Normal

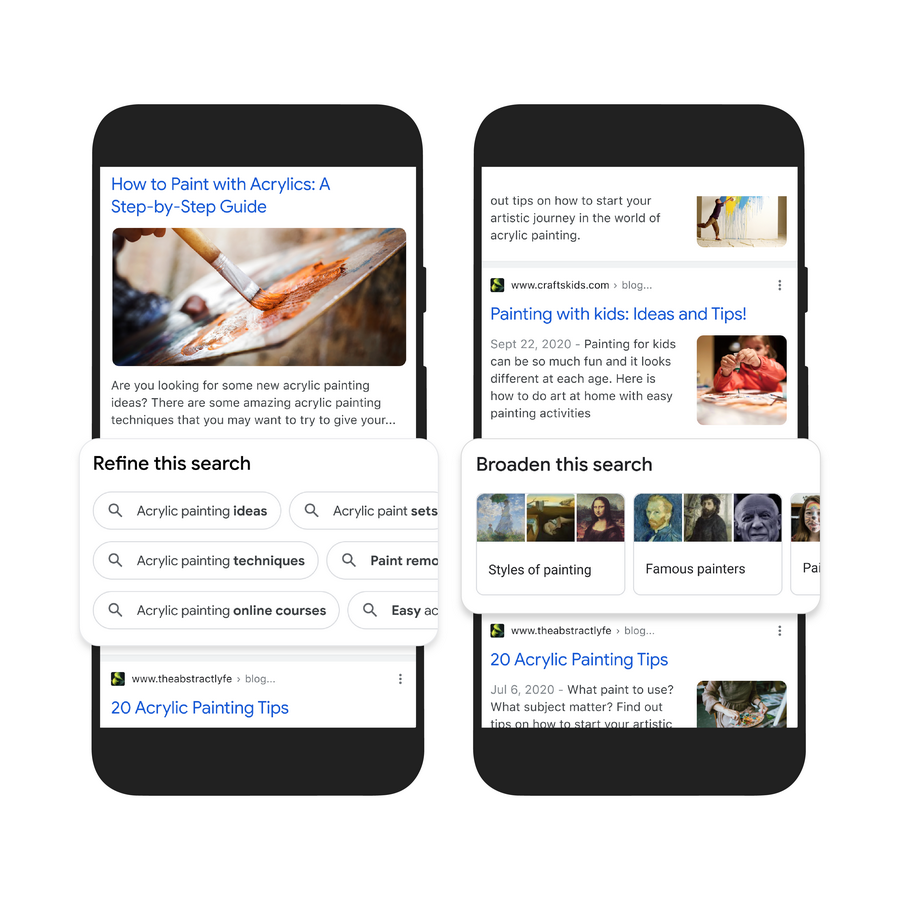

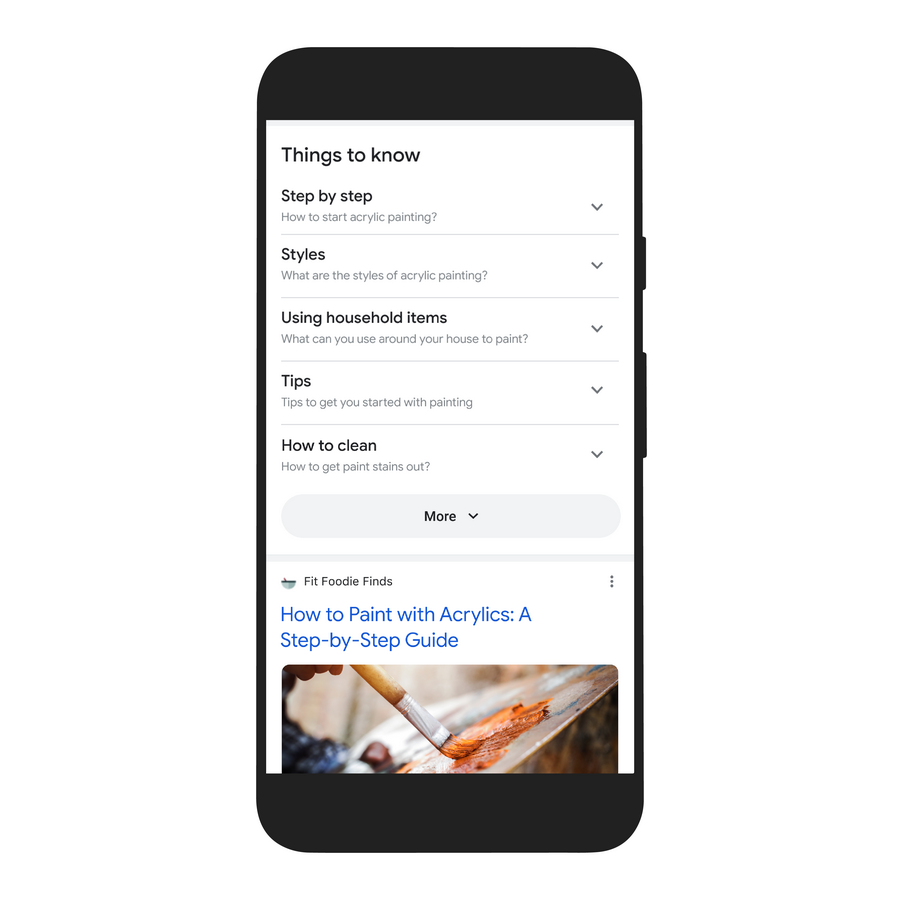

Barely two months after the announcement, Google has begun implementing MUM in some of its most used apps and features. In the coming months, Google Search will undergo a major overhaul. The company is creating a new, more visual search experience, and users will see more images and graphics in search results. Thanks to MUM, you will also be able to refine or broaden a search with a single click, zooming in on finer details or stepping back for a broader picture. In its announcement, Google used the example of acrylic painting: from the search results, users could zoom in on specific techniques commonly used in acrylic painting or get a broader picture of how the medium started.

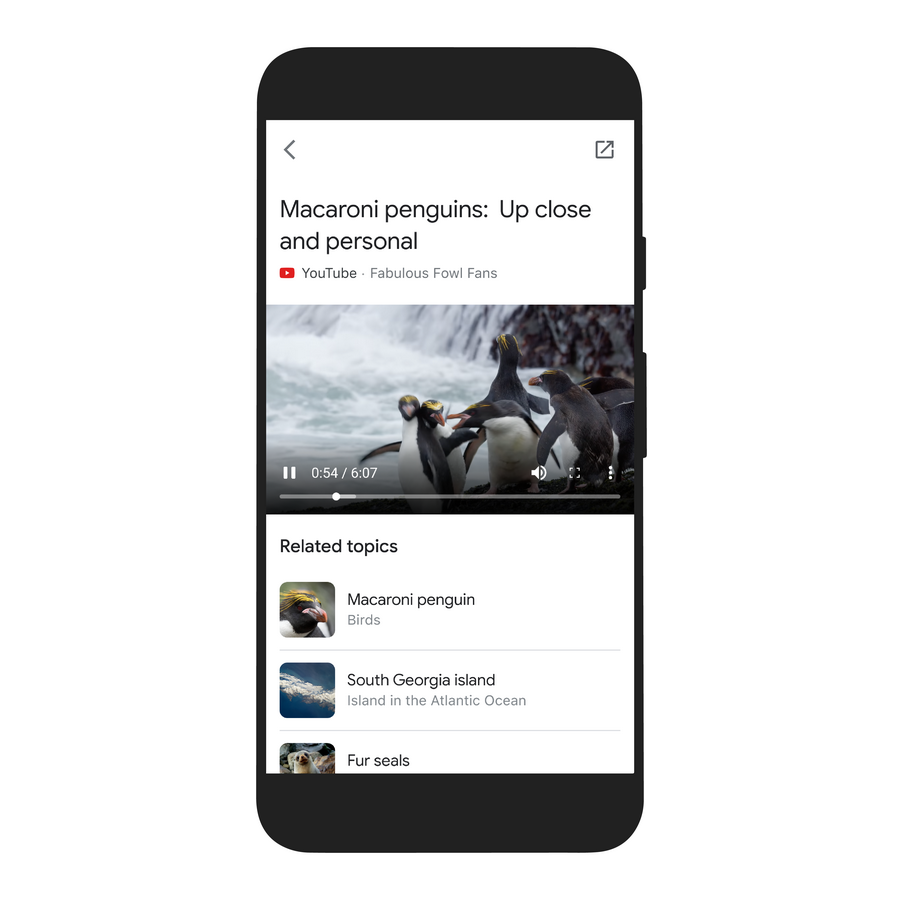

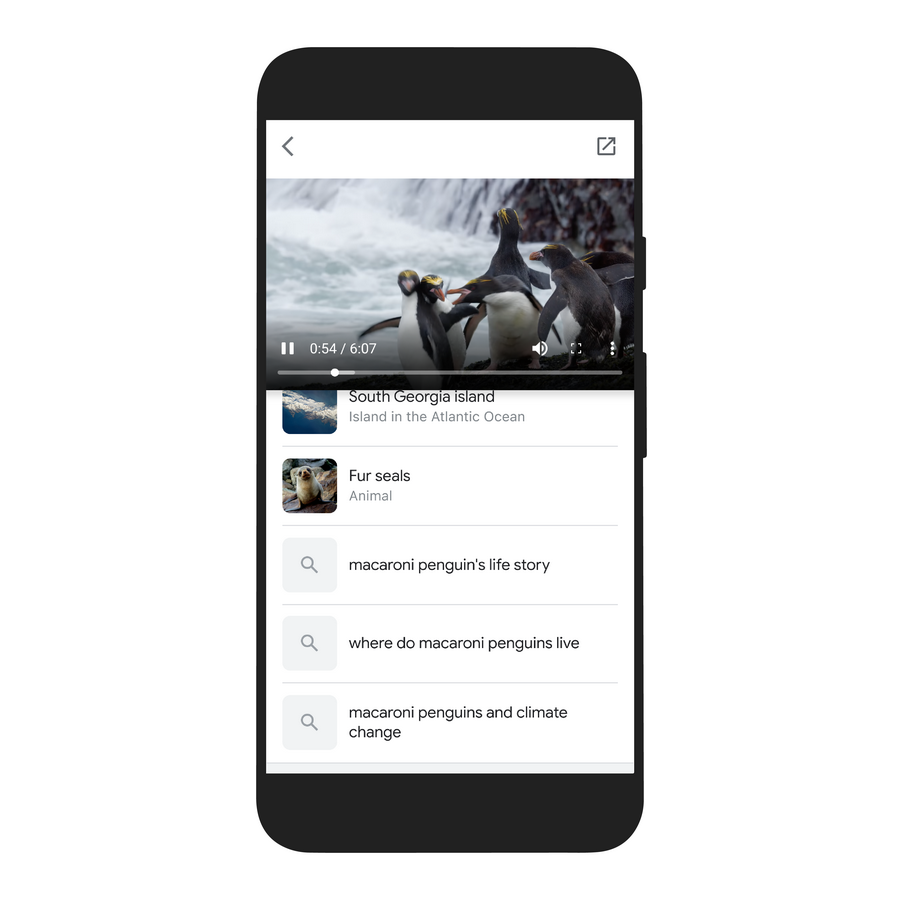

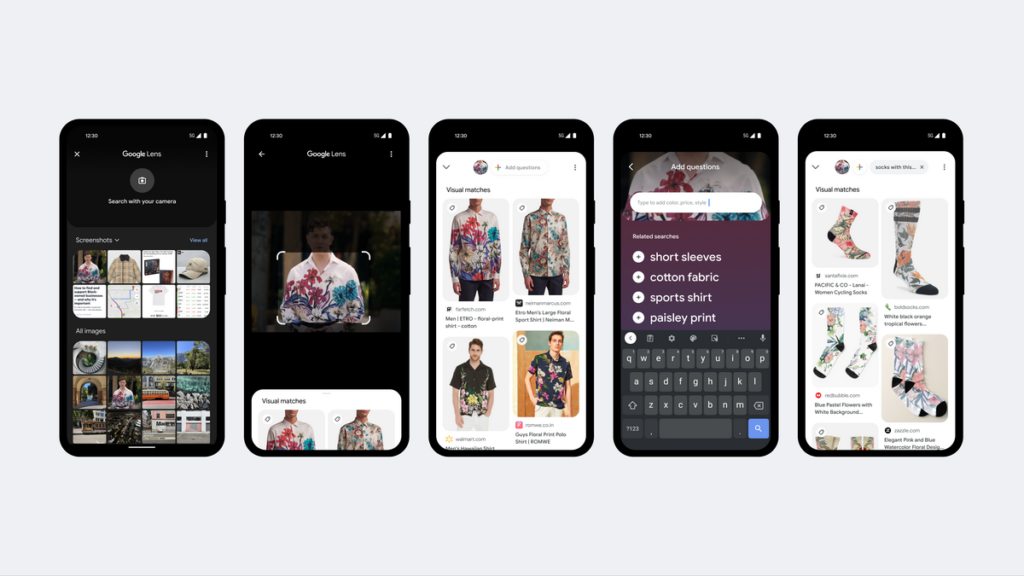

The search engine uses data such as language and even user behaviour, in addition to context, to recommend broadening or narrowing searches. Google is applying this to YouTube as well, and hopes to expand the search context to include topics mentioned in YouTube videos later this year. Contextual and multitask search is also making its way to Google Lens, which will be able to make sense of visual and text data at the same time. Lens is also coming to Chrome. Don’t expect the new Lens experience too soon, though: the rollout is expected in 2022, after internal testing.

Context is also making search more “shoppable”. Google is allowing users to zoom in on specifics when searching. For instance, if you’re searching for fashion apparel, you will be able to narrow your search by design and colour, or use the context of the original search to look for something else entirely. In addition, Google’s Shopping Graph will allow users to narrow searches with an “in stock” filter. This particular enhancement will be available in select countries only.

Expanding Search to Make a Positive Impact

Google isn’t just focusing on MUM for its own benefit. The company has also been applying its technology to create change. It is expanding its use of contextual data and A.I. to address environmental and social issues. While this is nothing new, some of the latest improvements could affect us more directly than ever.

Environmental Insights for Greener Cities

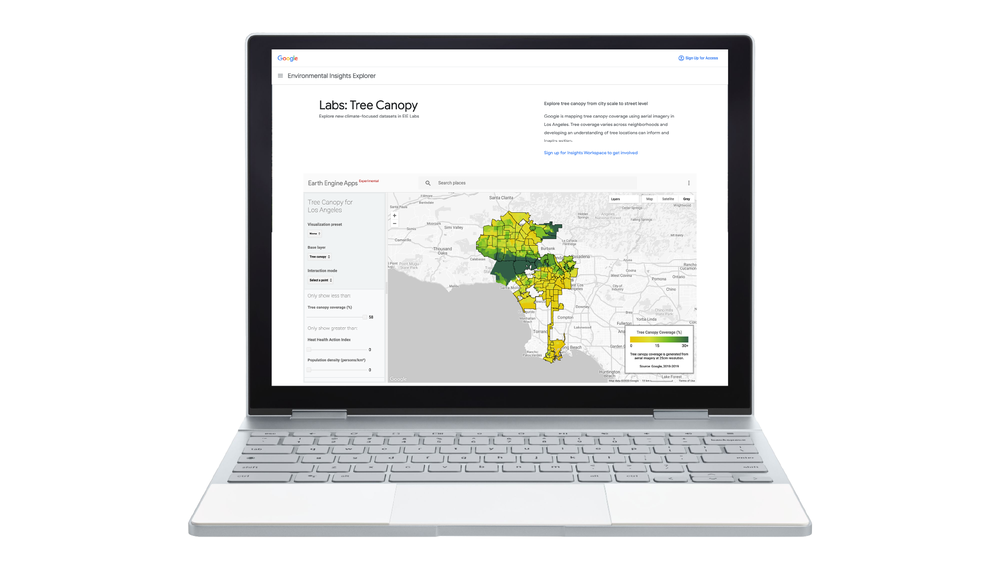

One of the features that could make the biggest impact is Google’s Environmental Insights Explorer. While it isn’t brand new, the company is looking to make it more readily available to cities to help them become greener. Environmental Insights Explorer will allow municipalities and city councils to make decisions based on data from A.I. and Google Earth Engine.

With this data, cities and municipalities will be able to visualise tree density within their jurisdictions and plan where to add trees and greenery. That, in turn, can help lower city temperatures and support carbon neutrality goals. The feature will be expanding to over 100 cities, including Yokohama and Sydney, this year.

Dealing with Natural Disasters with Actionable Insights

Google Maps will be getting more actionable insights when it comes to natural disasters. Google being an American company, its first feature is naturally more relevant to the U.S., where California and other areas have been hit by increasingly severe wildfires in recent years. Other places, such as Australia, Canada and parts of the African continent, are also experiencing increasingly deadly wildfires. It is increasingly apparent that the public needs data on wildfires.

As such, Google Maps will be getting a layer that allows users to see the boundaries of active wildfires. These boundaries are updated every 15 minutes, allowing users to avoid affected areas. The data will also help authorities coordinate evacuations and manage their response. Google is also running a pilot for flash flooding in India.

Simplifying Addresses

Google is expanding and simplifying one of its largest social projects: Plus Codes. The project, which was announced just under a year ago, is becoming more accessible with Address Maker. The new app builds on Plus Codes and gives users and organisations a simpler way to create new addresses. Governments and NGOs will be able to create addresses at scale more easily.